Self-Driving Tesla Mistakes Train Tracks for Road, Raises Safety Concerns

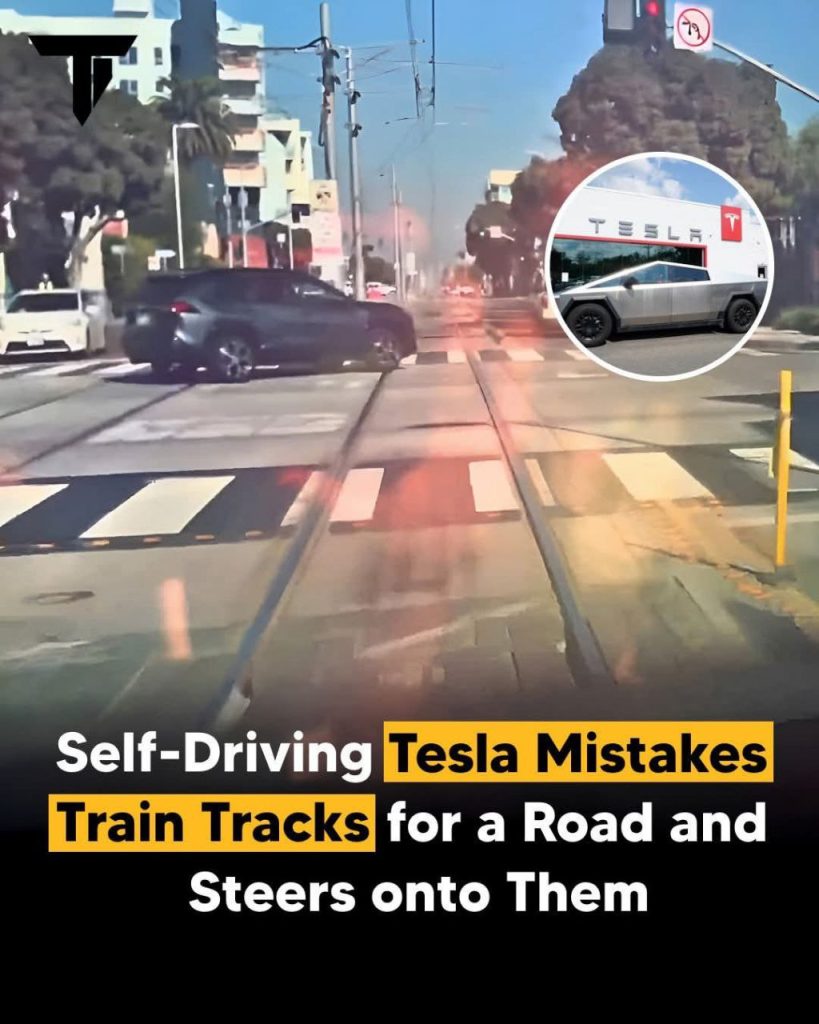

San Francisco, CA – A self-driving Tesla made headlines this week after it mistook train tracks for a roadway and steered directly onto them. The incident, captured on dashcam footage, has reignited concerns about the reliability and safety of Tesla’s Full Self-Driving (FSD) technology.

Incident Details

The event took place in San Francisco, where the Tesla, reportedly operating in autonomous mode, veered off the road and onto an active railway crossing. Witnesses stated that the car appeared to hesitate before proceeding forward, as if confused by road markings. Fortunately, no trains were present at the time, and the driver was able to regain manual control before any collision occurred.

The footage, which quickly went viral on social media, shows the Tesla’s AI seemingly mistaking the embedded train tracks for a lane. This has led to widespread criticism, with many users questioning the software’s ability to accurately distinguish between different types of road infrastructure.

Tesla’s Response

Tesla has yet to release an official statement on the incident. However, company representatives have previously defended the FSD system, emphasizing that it is still in a beta phase and requires drivers to remain attentive at all times. The automaker has also repeatedly stated that the feature is not yet fully autonomous and that human oversight is crucial.

Despite these reassurances, the latest incident adds to a growing list of concerns over Tesla’s self-driving technology. Regulators, including the National Highway Traffic Safety Administration (NHTSA), have been closely monitoring Tesla’s autonomous features following multiple reports of erratic driving behavior, phantom braking, and accidents involving FSD-equipped vehicles.

Growing Safety Concerns

Critics argue that Tesla’s aggressive push to deploy its self-driving software on public roads poses significant risks. While the company continues to refine its AI algorithms, safety experts caution that real-world conditions are unpredictable, and the technology may not yet be capable of handling complex scenarios such as train crossings or ambiguous road markings.

Transportation analysts also point out that Tesla’s system relies heavily on camera-based vision and lacks the LiDAR sensors used by some competitors. This reliance on cameras and neural networks may contribute to misinterpretations such as the one seen in San Francisco.

Regulatory Implications

In light of this and similar incidents, regulatory bodies may push for stricter oversight of Tesla’s self-driving software. The NHTSA has already launched multiple investigations into Tesla’s driver assistance features, and this latest episode could add pressure for more testing and tighter deployment standards.

Consumer advocacy groups are urging Tesla to take greater responsibility and improve the system before expanding its use. Many are calling for clearer disclaimers and enhanced driver monitoring to prevent over-reliance on the technology.

Conclusion

The self-driving Tesla’s mistake serves as a stark reminder that autonomous technology is still evolving and far from foolproof. While Tesla continues to refine its FSD capabilities, incidents like this one highlight the importance of maintaining human oversight and ensuring that AI-driven vehicles can safely navigate complex environments.

As investigations into the matter unfold, safety regulators, lawmakers, and Tesla enthusiasts alike will be watching closely to see how the company addresses the challenges posed by autonomous driving technology.